"It's easier to fool people than to convince them that they've been fooled." – Unknown

I'm an expert on how technology hijacks our psychological vulnerabilities. That's why I spent the last three years as a Design Ethicist at Google caring about how to design things in a way that defends a billion people's minds from getting hijacked.

When using technology, we often focus optimistically on all the things it does for us. But I want to show you where it might do the opposite.

Where does technology exploit our minds' weaknesses?

I learned to think this way when I was a magician. Magicians start by looking for blind spots, edges, vulnerabilities and limits of people's perception, so they can influence what people do without them even realizing it. Once you know how to push people's buttons, you can play them like a piano.

And this is exactly what product designers do to your mind. They play your psychological vulnerabilities (consciously and unconsciously) against you in the race to grab your attention.

I want to show you how they do it.

Western culture is built around ideals of individual choice and freedom. Millions of us fiercely defend our right to make "free" choices, while we ignore how those choices are manipulated upstream by menus we didn't choose in the first place.

This is exactly what magicians do. They give people the illusion of free choice while architecting the menu so that they win, no matter what you choose. I can't emphasize enough how deep this insight is.

When people are given a menu of choices, they rarely ask:

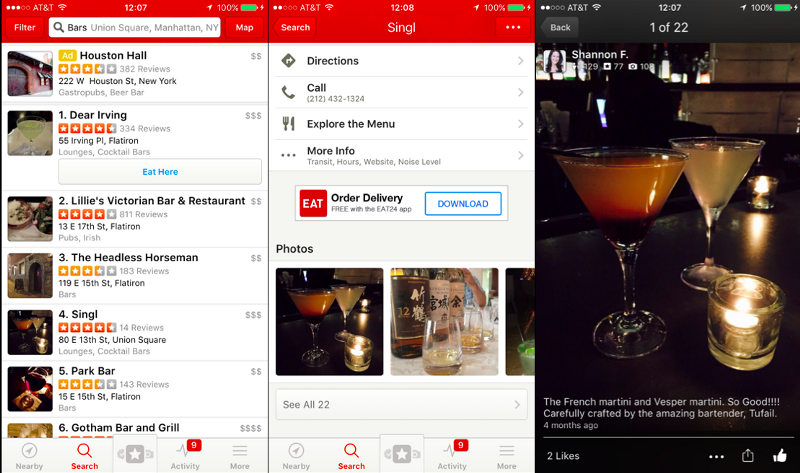

For example, imagine you're out with friends on a Tuesday night and want to keep the conversation going. You open Yelp to find nearby recommendations and see a list of bars. The group turns into a huddle of faces staring down at their phones comparing bars. They scrutinize the photos of each, comparing cocktail drinks. Is this menu still relevant to the original desire of the group?

It's not that bars aren't a good choice, it's that Yelp substituted the group's original question ("where can we go to keep talking?") with a different question ("what's a bar with good photos of cocktails?") all by shaping the menu.

Moreover, the group falls for the illusion that Yelp's menu represents a complete set of choices for where to go. While looking down at their phones, they don't see the park across the street with a band playing live music. They miss the pop-up gallery on the other side of the street serving crepes and coffee. Neither of those shows up on Yelp's menu.

The more choices technology gives us in nearly every domain of our lives (information, events, places to go, friends, dating, jobs) – the more we assume that our phone is always the most empowering and useful menu to pick from. Is it?

The "most empowering" menu is different from the menu that has the most choices. But when we blindly surrender to the menus we're given, it's easy to lose track of the difference:

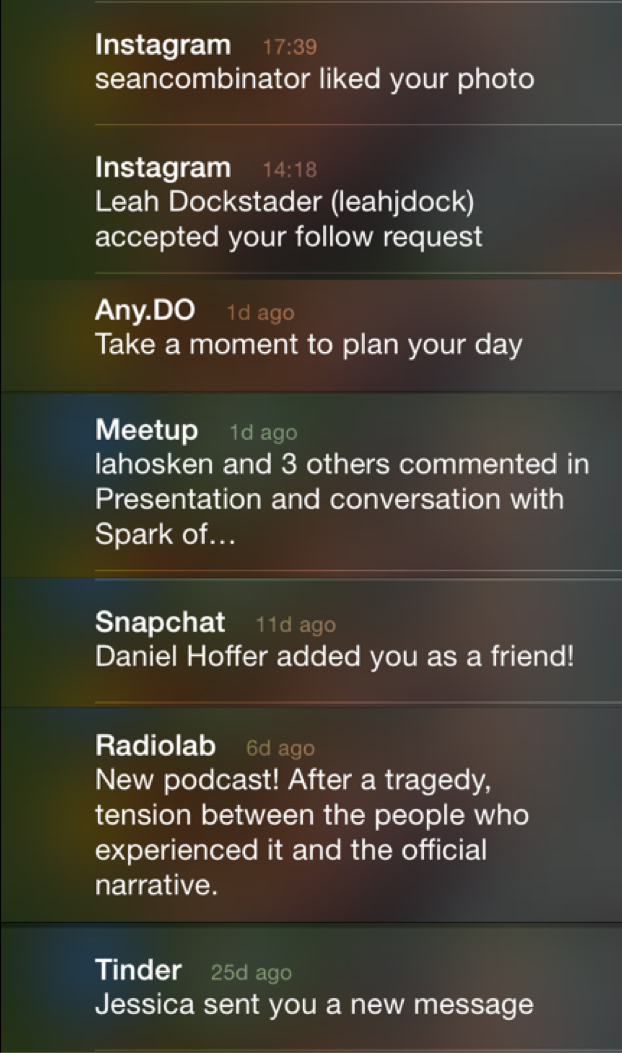

When we wake up in the morning and turn our phone over to see a list of notifications, it frames the experience of "waking up in the morning" around a menu of "all the things I've missed since yesterday." (For more examples, see Joe Edelman's Empowering Design talk.)

By shaping the menus we pick from, technology hijacks the way we perceive our choices and replaces them with new ones. But the more closely we pay attention to the options we're given, the more we'll notice when they don't actually align with our true needs.

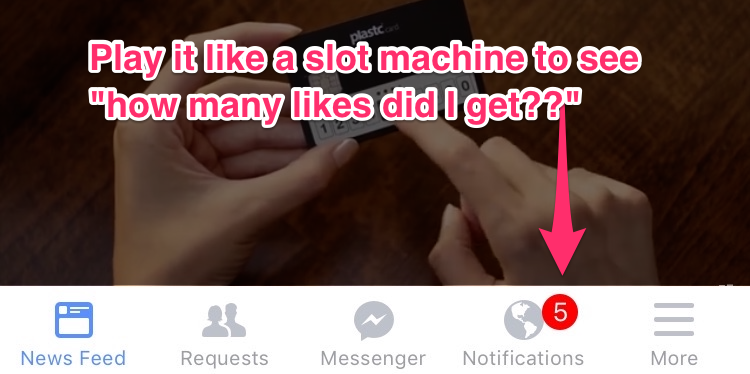

If you're an app, how do you keep people hooked? Turn yourself into a slot machine.

The average person checks their phone 150 times a day. Why do we do this? Are we making 150 conscious choices?

One major reason why is the #1 psychological ingredient in slot machines: intermittent variable rewards.

If you want to maximize addictiveness, all tech designers need to do is link a user's action (like pulling a lever) with a variable reward. You pull a lever and immediately receive either an enticing reward (a match, a prize!) or nothing. Addictiveness is maximized when the rate of reward is most variable.
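To make "variable" concrete, here is a small Python simulation contrasting a fixed schedule (a reward on every fifth pull) with a variable one that pays out at the same average rate but at random. It is an illustrative sketch, not a model of any real product or a measure of addictiveness; the function names, the 1-in-5 rate, and the seed are arbitrary assumptions.

```python
import random


def fixed_ratio_rewards(pulls: int, every: int) -> list[bool]:
    """Reward exactly every `every`-th pull: the payoff is fully predictable."""
    return [(i + 1) % every == 0 for i in range(pulls)]


def variable_ratio_rewards(pulls: int, mean_every: int, seed: int = 0) -> list[bool]:
    """Reward each pull with probability 1/mean_every.

    Same average rate as the fixed schedule, but no way to know which pull pays.
    """
    rng = random.Random(seed)
    return [rng.random() < 1 / mean_every for _ in range(pulls)]


def waits_between_rewards(rewards: list[bool]) -> list[int]:
    """How many pulls the user makes to get each reward."""
    waits, pulls_since_last = [], 0
    for hit in rewards:
        pulls_since_last += 1
        if hit:
            waits.append(pulls_since_last)
            pulls_since_last = 0
    return waits


fixed = waits_between_rewards(fixed_ratio_rewards(1000, every=5))
variable = waits_between_rewards(variable_ratio_rewards(1000, mean_every=5, seed=42))
print(set(fixed))                    # {5}: the wait never changes
print(min(variable), max(variable))  # the wait varies from one reward to the next
```

Both schedules pay out about once every five pulls; the only difference is predictability, which is the ingredient the paragraph above points to.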

Does this effect really work on people? Yes. Slot machines make more money in the United States than baseball, movies, and theme parks combined. Relative to other kinds of gambling, people get "problematically involved" with slot machines 3 to 4 times faster, according to NYU professor Natasha Dow Schull, author of Addiction by Design.

But here's the unfortunate truth: several billion people have a slot machine in their pocket.

Apps and websites sprinkle intermittent variable rewards all over their products because it's good for business.

But in other cases, slot machines emerge by accident. For example, there is no malicious corporation behind all of email that consciously chose to make it a slot machine. No one profits when millions check their email and nothing's there. Neither did Apple's and Google's designers want phones to work like slot machines. It emerged by accident.

But now companies like Apple and Google have a responsibility to reduce these effects by converting intermittent variable rewards into less addictive, more predictable ones with better design. For example, they could empower people to set predictable times during the day or week for when they want to check "slot machine" apps, and correspondingly adjust when new messages are delivered to align with those times.
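A minimal sketch of that batching idea, assuming a hypothetical per-user list of delivery times: a message that arrives between slots is held until the next chosen slot instead of interrupting immediately. The function name and the settings are illustrative only, not an Apple or Google API.

```python
from datetime import datetime, time, timedelta


def next_delivery(arrival: datetime, delivery_times: list[time]) -> datetime:
    """Hold a message until the next user-chosen delivery slot.

    `delivery_times` is a hypothetical per-user setting, e.g. [time(12), time(18)].
    """
    slots = sorted(delivery_times)
    for slot in slots:
        candidate = datetime.combine(arrival.date(), slot)
        if candidate >= arrival:
            return candidate
    # All of today's slots have passed: deliver at the first slot tomorrow.
    return datetime.combine(arrival.date() + timedelta(days=1), slots[0])


slots = [time(12, 0), time(18, 0)]
print(next_delivery(datetime(2024, 5, 1, 9, 30), slots))  # 2024-05-01 12:00:00
print(next_delivery(datetime(2024, 5, 1, 19, 5), slots))  # 2024-05-02 12:00:00
```

Because new messages only appear at times the user picked, checking the app in between no longer pays off at random.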

Another way apps and websites hijack people's minds is by inducing a "1% chance you could be missing something important."

If I convince you that I'm a channel for important information, messages, friendships, or potential sexual opportunities, it will be hard for you to turn me off, unsubscribe, or remove your account, because (aha, I win) you might miss something important.

But if we zoom into that fear, we'll discover that it's unbounded: we'll always miss something important at any point when we stop using something.

But living moment to moment with the fear of missing something isn't how we're built to live.

And it's amazing how quickly, once we let go of that fear, we wake up from the illusion. When we unplug for more than a day, unsubscribe from those notifications, or go to Camp Grounded, the concerns we thought we'd have don't actually happen.

We don't miss what we don't see.

The thought, "what if I miss something important?" is generated in advance of unplugging, unsubscribing, or turning off – not after. Imagine if tech companies recognized that, and helped us proactively tune our relationships with friends and businesses in terms of what we define as "time well spent" for our lives, instead of in terms of what we might miss.

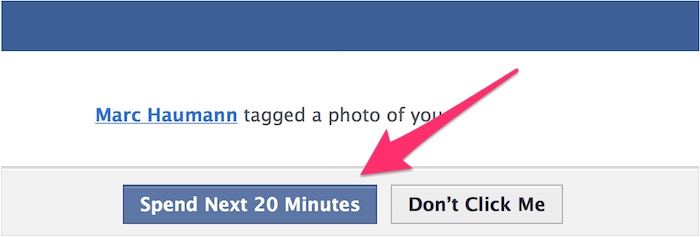

We're all vulnerable to social approval. The need to belong, to be approved or appreciated by our peers, is among the highest human motivations. But now our social approval is in the hands of tech companies.

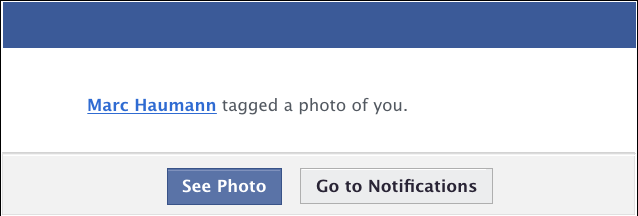

When I get tagged by my friend Marc, I imagine him making a conscious choice to tag me. But I don't see how a company like Facebook orchestrated his doing that in the first place.

Facebook, Instagram or Snapchat can manipulate how often people get tagged in photos by automatically suggesting all the faces people should tag (e.g. by showing a box with a 1-click confirmation, "Tag Tristan in this photo?").

So when Marc tags me, he's actually responding to Facebook's suggestion, not making an independent choice. Through design choices like this, Facebook controls the multiplier for how often millions of people experience their social approval on the line.

The same happens when we change our main profile photo. Facebook knows that's a moment when we're vulnerable to social approval: "what do my friends think of my new pic?" Facebook can rank this higher in the news feed, so it sticks around for longer and more friends will like or comment on it. Each time they like or comment on it, we'll get pulled right back.

Everyone innately responds to social approval, but some demographics (such as teenagers) are more vulnerable to it than others. That's why it's so important to recognize how powerful designers are when they exploit this vulnerability.

We are vulnerable to needing to reciprocate others' gestures. But as with social approval, tech companies now manipulate how often we experience it.

In some cases, itís by accident. Email, texting and messaging apps are social reciprocity factories. But in other cases, companies exploit this vulnerability on purpose.

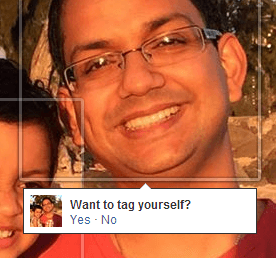

LinkedIn is the most obvious offender. LinkedIn wants as many people creating social obligations for each other as possible, because each time they reciprocate (by accepting a connection, responding to a message, or endorsing someone back for a skill) they have to come back to linkedin.com where they can get people to spend more time.

Like Facebook, LinkedIn exploits an asymmetry in perception. When you receive an invitation from someone to connect, you imagine that person making a conscious choice to invite you, when in reality, they likely unconsciously responded to LinkedIn's list of suggested contacts. In other words, LinkedIn turns your unconscious impulses (to "add" a person) into new social obligations that millions of people feel they must repay, all while LinkedIn profits from the time people spend doing it.

Imagine millions of people getting interrupted like this throughout their day, running around like chickens with their heads cut off, reciprocating each other – all designed by companies who profit from it.

Welcome to social media.

Imagine if technology companies had a responsibility to minimize social reciprocity. Or imagine an independent organization that represented the public's interests (an industry consortium, or an FDA for tech) and monitored when technology companies abused these biases.

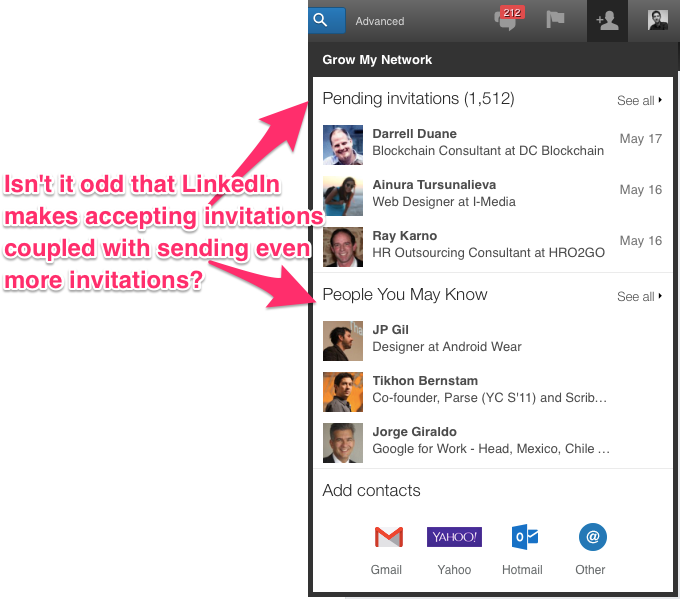

Another way to hijack people is to keep them consuming things, even when they aren't hungry anymore.

How? Easy. Take an experience that was bounded and finite, and turn it into a bottomless flow that keeps going.

Cornell professor Brian Wansink demonstrated this in his study showing that you can trick people into continuing to eat soup by giving them a bottomless bowl that automatically refills as they eat. With bottomless bowls, people eat 73% more calories than those with normal bowls and underestimate how many calories they ate by 140 calories.

Tech companies exploit the same principle. News feeds are purposely designed to auto-refill with reasons to keep you scrolling, and purposely eliminate any reason for you to pause, reconsider or leave.
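The structural difference between a bottomless feed and a bounded one fits in a few lines of Python. The feed contents, page size, and the wording of the stopping cue are hypothetical; the point is that the bounded version ends each page at an explicit place to pause, and more items appear only if the user asks again.

```python
from collections.abc import Iterator
from itertools import islice


def endless_feed() -> Iterator[str]:
    """Bottomless feed: there is always one more item, so no natural stopping point."""
    n = 0
    while True:
        n += 1
        yield f"post {n}"


def next_page(feed: Iterator[str], page_size: int) -> list[str]:
    """Bounded alternative: take one finite page, then end with an explicit stopping cue.

    Nothing more is served until the caller asks for another page.
    """
    page = list(islice(feed, page_size))
    page.append("You're all caught up. Load more?")
    return page


feed = endless_feed()
print(next_page(feed, 3))
# ['post 1', 'post 2', 'post 3', "You're all caught up. Load more?"]
print(next_page(feed, 3)[:3])
# ['post 4', 'post 5', 'post 6']
```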

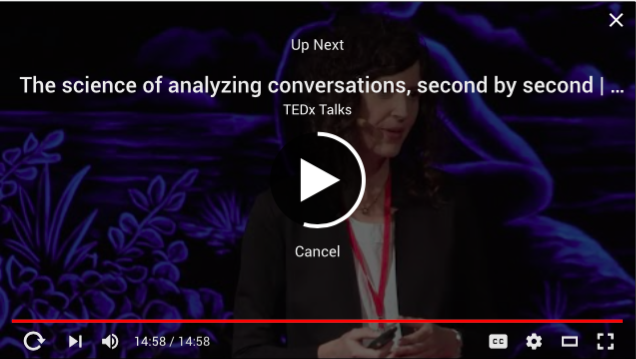

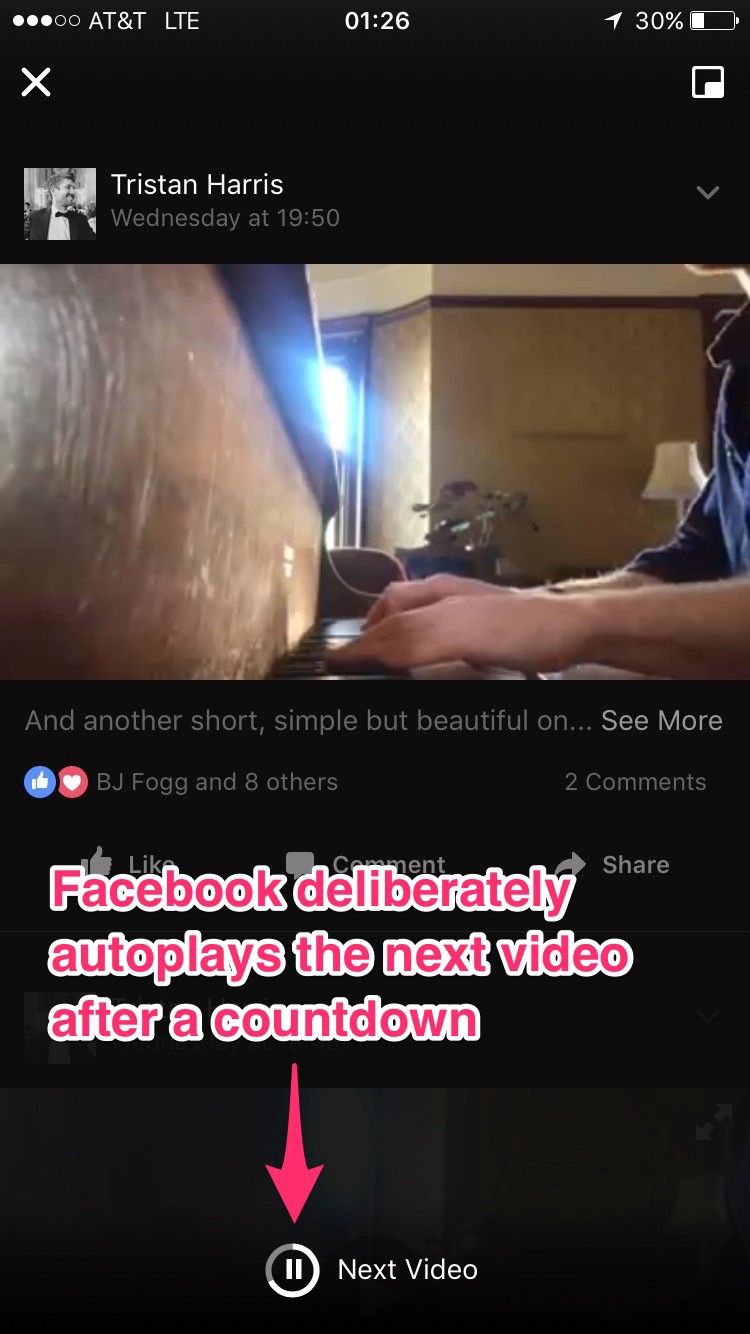

It's also why video and social media sites like Netflix, YouTube or Facebook autoplay the next video after a countdown instead of waiting for you to make a conscious choice (in case you won't). A huge portion of traffic on these websites is driven by autoplaying the next thing.

Tech companies often claim that "we're just making it easier for users to see the video they want to watch" when they are actually serving their business interests. And you can't blame them, because increasing "time spent" is the currency they compete for.

Instead, imagine if technology companies empowered you to consciously bound your experience to align with what would be "time well spent" for you. Not just bounding the quantity of time you spend, but the qualities of what would be "time well spent."

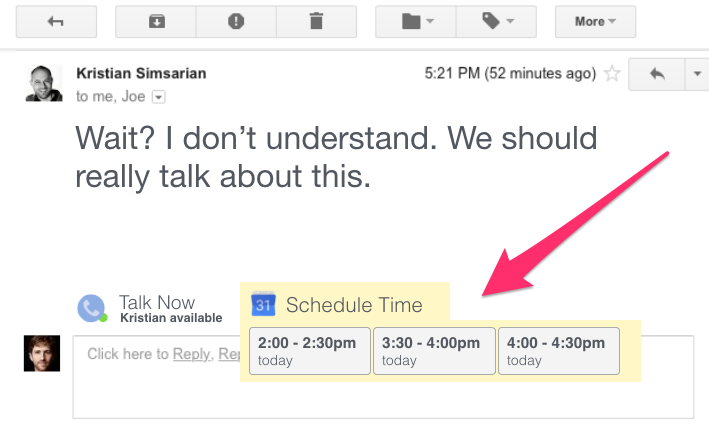

Companies know that messages that interrupt people immediately are more persuasive at getting people to respond than messages delivered asynchronously (like email or any deferred inbox).

Given the choice, Facebook Messenger (or WhatsApp, WeChat or Snapchat for that matter) would prefer to design their messaging system to interrupt recipients immediately (and show a chat box) instead of helping users respect each other's attention.

In other words, interruption is good for business.

It's also in their interest to heighten the feeling of urgency and social reciprocity. For example, Facebook automatically tells the sender when you "saw" their message, instead of letting you avoid disclosing whether you read it ("now that you know I've seen the message, I feel even more obligated to respond").

By contrast, Apple more respectfully lets users toggle "Read Receipts" on or off.
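As a hedged illustration of the two approaches, the sketch below contrasts a status that always reveals reading with an opt-in one that discloses "Read" only when the reader has chosen to share read receipts. The `Reader` type, its `send_read_receipts` field, and the status strings are hypothetical and do not describe how Facebook or Apple actually implement these features.

```python
from dataclasses import dataclass


@dataclass
class Reader:
    """Hypothetical model of a message recipient's privacy setting."""
    name: str
    send_read_receipts: bool  # the reader's own toggle


def status_always_disclosed(has_read: bool) -> str:
    """Auto-disclosure: the reader has no say, so reading is always revealed."""
    return "Seen" if has_read else "Delivered"


def status_shown_to_sender(reader: Reader, has_read: bool) -> str:
    """Opt-in disclosure: 'Read' only if the reader read it AND chose to share that.

    Otherwise the sender sees 'Delivered' either way, so reading stays private.
    """
    if has_read and reader.send_read_receipts:
        return "Read"
    return "Delivered"


alice = Reader("Alice", send_read_receipts=False)
print(status_always_disclosed(has_read=True))        # Seen
print(status_shown_to_sender(alice, has_read=True))  # Delivered
```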

The problem is, maximizing interruptions in the name of business creates a tragedy of the commons, ruining global attention spans and causing billions of unnecessary interruptions each day. This is a huge problem we need to fix with shared design standards (potentially, as part of Time Well Spent).

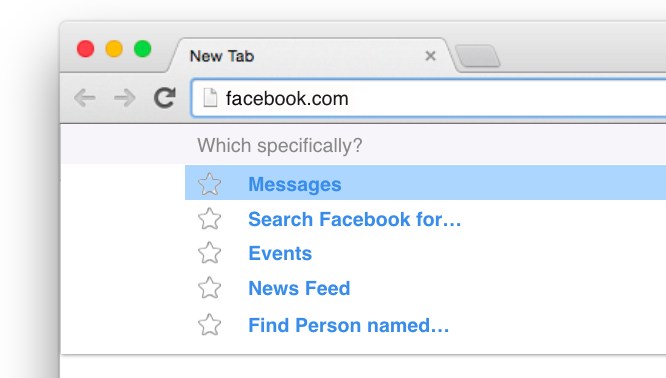

Another way apps hijack you is by taking your reasons for visiting the app (to perform a task) and making them inseparable from the app's business reasons (maximizing how much we consume once we're there).

For example, in the physical world of grocery stores, the #1 and #2 most popular reasons to visit are pharmacy refills and buying milk. But grocery stores want to maximize how much people buy, so they put the pharmacy and the milk at the back of the store.

In other words, they make the thing customers want (milk, pharmacy) inseparable from what the business wants. If stores were truly organized to support people, they would put the most popular items in the front.

Tech companies design their websites the same way. For example, when you want to look up a Facebook event happening tonight (your reason), the Facebook app doesn't allow you to access it without first landing on the news feed (their reasons), and that's on purpose. Facebook wants to convert every reason you have for using Facebook into their reason, which is to maximize the time you spend consuming things.

Instead, imagine if …

In a Time Well Spent world, there is always a direct way to get what you want separately from what businesses want. Imagine a digital "bill of rights" outlining design standards that forced the products used by billions of people to let them navigate directly to what they want without needing to go through intentionally placed distractions.

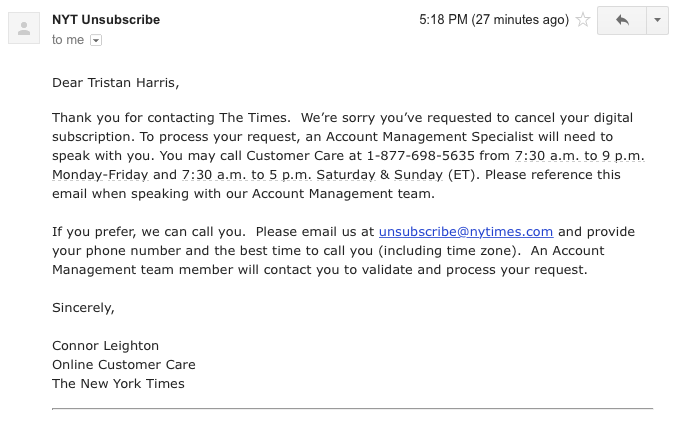

We're told that it's enough for businesses to "make choices available."

Businesses naturally want to make the choices they want you to make easier, and the choices they don't want you to make harder. Magicians do the same thing. You make it easier for a spectator to pick the thing you want them to pick, and harder to pick the thing you don't.

For example, NYTimes.com lets you "make a free choice" to cancel your digital subscription. But instead of just doing it when you hit "Cancel Subscription," they send you an email with information on how to cancel your account by calling a phone number that's only open at certain times.

Instead of viewing the world in terms of availability of choices, we should view the world in terms of friction required to enact choices. Imagine a world where choices were labeled with how difficult they were to fulfill (like coefficients of friction) and there was an independent entity – an industry consortium or non-profit – that labeled these difficulties and set standards for how easy navigation should be.

Lastly, apps can exploit people's inability to forecast the consequences of a click.

People don't intuitively forecast the true cost of a click when it's presented to them. Salespeople use "foot in the door" techniques, starting with a small, innocuous request ("just one click to see which tweet got retweeted") and escalating from there ("why don't you stay awhile?"). Virtually all engagement websites use this trick.

Imagine if web browsers and smartphones, the gateways through which people make these choices, were truly watching out for people and helped them forecast the consequences of clicks, based on real data about what benefits and costs those clicks actually had.

That's why I add "Estimated reading time" to the top of my posts. When you put the "true cost" of a choice in front of people, you're treating your users or audience with dignity and respect. In a Time Well Spent internet, choices could be framed in terms of projected cost and benefit, so people would be empowered to make informed choices by default, not by doing extra work.
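An estimate like this is simple arithmetic: count the words and divide by an assumed reading speed, rounding up. The sketch below assumes 250 words per minute, a round figure chosen for illustration; sites pick different speeds, and the essay does not say which formula these posts use.

```python
import math
import re


def estimated_reading_minutes(text: str, words_per_minute: int = 250) -> int:
    """Estimate reading time: word count divided by an assumed reading speed.

    The 250 wpm default is an assumption; adjust it for your audience.
    Always reports at least 1 minute.
    """
    words = len(re.findall(r"\S+", text))  # whitespace-separated tokens
    return max(1, math.ceil(words / words_per_minute))


article = "word " * 1800
print(f"Estimated reading time: {estimated_reading_minutes(article)} min")
# Estimated reading time: 8 min
```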

Are you upset that technology hijacks your agency? I am too. I've listed a few techniques, but there are literally thousands. Imagine whole bookshelves, seminars, workshops and trainings that teach aspiring tech entrepreneurs techniques like these. Imagine hundreds of engineers whose job every day is to invent new ways to keep you hooked.

The ultimate freedom is a free mind, and we need technology that's on our team to help us live, feel, think and act freely.

We need our smartphones, notification screens and web browsers to be exoskeletons for our minds and interpersonal relationships that put our values, not our impulses, first. People's time is valuable. And we should protect it with the same rigor as privacy and other digital rights.

Tristan Harris was a Product Philosopher at Google until 2016, where he studied how technology affects a billion people's attention, wellbeing and behavior. For more resources on Time Well Spent, see http://timewellspent.io.